Teaching is always hard. But there is a world of difference between teaching one person and teaching a (large) class, and the contrast offers some useful insights. One-on-one, the teacher at least has the possibility of probing what the student is actually thinking on each concept and adjusting the lesson accordingly, via two-way communication. By contrast, in a large class, instructors can neither see what every student is actually thinking on each concept, nor identify the specific misconceptions that block many students.

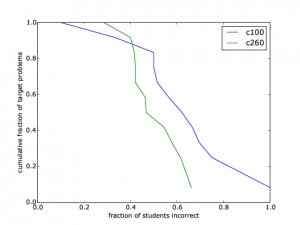

How big a problem are such “blindspots” in our education system? Below are some data about a problem most instructors are unhappily familiar with: the ineffectiveness of prerequisites. In a class that required a Probability / Statistics prereq, students were given simple problems using those probability concepts. On average, students made serious conceptual errors on about half the problems (Fig. 1, “c260” curve). Depressingly, their rate of conceptual errors was only 10% lower than that of students with no Probability / Statistics prereq training at all (“c100” curve). Studies in many STEM fields have found the same result.

- These students passed the Probability & Statistics prerequisite course, yet do not understand half the basic concepts — a large blindspot not only for the prereq instructor and students, but also for any subsequent instructors whose classes depend on that prereq.

- Detecting blindspots requires an independent transfer metric. I’ll refer to these “transfer metrics” as target problems, because they should be chosen from representative real-world problems students ought to be able to solve with the concepts. Crucially, target problems must be about real-world challenges that were not covered in class, so that they measure transfer exactly as students will experience it in the real world. Typical exams do not do this, because students consider such problems “unfair” (“that wasn’t covered in class!”).

- Whereas blindspots are the “unknown unknowns” of teaching, target problems provide a direct, empirical way of detecting them, turning them into “known unknowns” that we can then solve.

Courselets.org is an online active-learning platform that uses target problems as a teaching method to detect and solve blindspots in a scalable way. Concretely, it helps students identify every specific misconception that is blocking them, and gives them specific exercises to reliably overcome those misconceptions. In a traditional class setting, mistakes are always treated as a bad thing (“5 points off!”), but in Courselets they become immediate stepping stones to fixing students’ understanding and boosting their ability to solve real-world (transfer) problems.

As you can see in this video, the student interface is a simple “chat” where the student works out target problems as if in conversation with the instructor and other students:

- target problems are typically open-response: the student types text (and equations or pictures) giving both a specific answer (not guessable) and 1-2 sentences on the thought process leading to that answer.

- the student engages in self-assessment and peer instruction, using both other students’ answers and the expert answer.

- if the student made an error, they immediately assess whether it fits any of the common errors already identified.

- the student then immediately works on error resolution exercises addressing their specific misconception(s).

- if the student is still confused (or made a novel error), other users (instructor, learning assistants, other students) are prompted to provide next steps (e.g. add their error to the list of common errors; suggest exercises etc.).
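
The steps above can be sketched as a small decision routine. This is only an illustrative sketch of the flow as described here, not Courselets' actual implementation; the names `TargetProblem`, `ErrorModel`, `next_steps`, and `ASK_FOR_HELP` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical label for the "prompt other users" step.
ASK_FOR_HELP = "ask instructor, learning assistants, or other students for next steps"


@dataclass
class ErrorModel:
    """A common error already identified for a target problem (hypothetical type)."""
    description: str
    exercises: List[str] = field(default_factory=list)


@dataclass
class TargetProblem:
    """An open-response problem with an expert answer and known common errors."""
    prompt: str
    expert_answer: str
    common_errors: List[ErrorModel] = field(default_factory=list)


def next_steps(
    problem: TargetProblem,
    made_error: bool,
    matched_error: Optional[int] = None,
    still_confused: bool = False,
) -> List[str]:
    """Return what the student does after self-assessing against the expert answer.

    matched_error is the index of the common error the student recognized as
    their own, or None if the error is novel.
    """
    if not made_error:
        return ["move on to the next target problem"]
    steps: List[str] = []
    if matched_error is not None:
        # Known misconception: go straight to its error resolution exercises.
        steps.extend(problem.common_errors[matched_error].exercises)
    if matched_error is None or still_confused:
        # Novel error or lingering confusion: other users are prompted to help.
        steps.append(ASK_FOR_HELP)
    return steps
```

The point of the sketch is that every branch ends in a concrete action for the student, so an error never leaves them without a next step.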

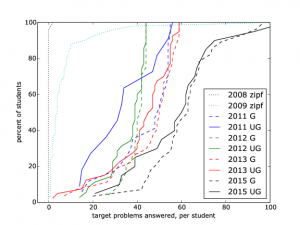

Does directly addressing each student’s blindspots in this way make a difference? Here’s a quick summary of data from a five-year study of a Bioinformatics / Probabilistic Modeling course in the UCLA Computer Science department:

- the average student in the course had 20 misconceptions to fix, and needed to attempt around 40 target problems to identify them.

- when target problems were posed verbally in class (2009), student engagement fell far short of the number needed to identify and fix their misconceptions. Posing the same target problems using Courselets (2011-2015) successfully engaged each student to answer >60 target problems on average. Moreover, it eliminated disparities among groups (e.g. undergrads vs. graduate students; men vs. women; bottom 50% vs. top 10%).

- even common misconceptions (blocking 30% or more of all students) were found not to be directly addressed by the textbook. While this may be unsurprising (textbook authors do not write about the wrong ways of thinking), it raises a very practical question: if these misconceptions are not addressed by the lecturer or textbook, where are students supposed to turn to understand and resolve them?

- even limited sampling of student responses on Courselets rapidly discovered most student misconceptions; e.g. on average the top 4 error models per problem covered 70-80% of student errors.

- median exam scores increased from 53% to 72-80%. Overall, after the switch, students below the median scored in the range that students above the median had reached before the switch.

- most interestingly, in every year from 2011 to 2015 student attrition in the course was approximately four-fold lower than under the 2004-2009 lecture course format (down from 48.3% to 13.4%), with an even larger drop for women students (down from 73.1% to 7.4%).
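
Two of the figures above are simple ratios, and it may help to see how they could be computed. The sketch below is an assumption about the arithmetic, not the study's actual analysis code: `top_k_coverage` and `fold_reduction` are hypothetical helper names, and the error labels in `sample_labels` are invented purely for illustration. Only the attrition percentages come from the text.

```python
from collections import Counter
from typing import Iterable


def top_k_coverage(error_labels: Iterable[str], k: int = 4) -> float:
    """Fraction of all observed errors accounted for by the k most common error models."""
    counts = Counter(error_labels)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    covered = sum(n for _, n in counts.most_common(k))
    return covered / total


def fold_reduction(before_pct: float, after_pct: float) -> float:
    """How many times smaller the 'after' rate is than the 'before' rate."""
    return before_pct / after_pct


# Invented labels for one problem: 20 erroneous answers spread over 8 error models.
sample_labels = (
    ["A"] * 6 + ["B"] * 4 + ["C"] * 3 + ["D"] * 2 + ["E"] * 2 + ["F", "G", "H"]
)
coverage = top_k_coverage(sample_labels, k=4)  # 15 of 20 errors, i.e. 0.75

# Attrition figures quoted in the text.
overall_fold = fold_reduction(48.3, 13.4)  # about 3.6
women_fold = fold_reduction(73.1, 7.4)     # about 9.9
```

On the quoted figures, overall attrition fell about 3.6-fold (the "approximately four-fold" above), while attrition among women fell nearly ten-fold.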